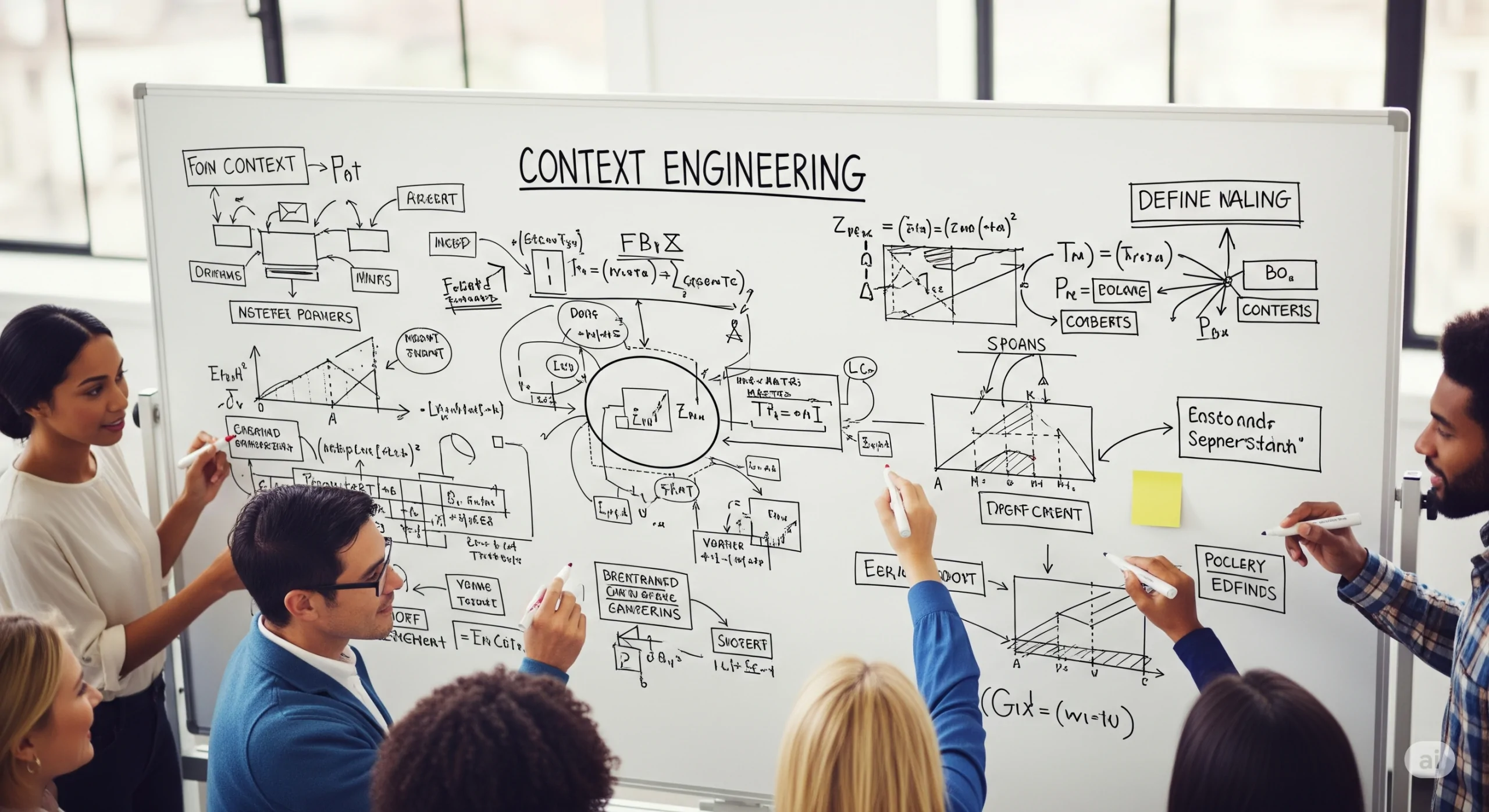

Context Engineering: The Hidden Engine Behind Powerful AI Experiences

Introduction: Moving Beyond Prompt Engineering

In the early days of working with large language models (LLMs), prompt engineering felt like the holy grail. Tweak a few words, set a tone, throw in a clever example, and magic happened. But as we push LLMs into real-world applications, we’re hitting a wall: prompting alone isn’t enough.

Enter Context Engineering—a more systematic, comprehensive way to give LLMs what they need to perform intelligently and consistently.

What Is Context Engineering?

Think of context as everything that surrounds a prompt: instructions, history, data, memory, available tools, and even the output format. While prompting is about what you say, context engineering is about what the model knows before you say it, and how that shapes the way it interprets your request.

A robust context includes:

- System Instructions – Set the tone, rules, and style.

- User Task Cues – The actual prompt or user question.

- Conversation History – Memory of prior interactions.

- Personal Memory – User preferences, goals, or saved projects.

- External Knowledge – Retrieved documents, database queries, web snippets.

- Tools & Functions – Actions the model can invoke beyond generating text (e.g., API calls).

- Schemas or Output Structures – Defined formats (e.g., JSON, Markdown).
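To make the list above concrete, here is a minimal Python sketch, assuming a chat-style API that accepts a list of role/content messages. The `Context` class and its field names are hypothetical; the point is only that each component becomes a distinct, labeled part of what the model sees.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class Context:
    """Hypothetical container for the context components listed above."""

    system_instructions: str                                      # tone, rules, style
    user_task: str                                                # the actual user request
    history: list[dict] = field(default_factory=list)             # prior {"role", "content"} turns
    personal_memory: dict = field(default_factory=dict)           # preferences, goals, projects
    external_knowledge: list[str] = field(default_factory=list)   # retrieved snippets
    tools: list[dict] = field(default_factory=list)               # descriptions of callable tools
    output_schema: dict | None = None                             # expected response format

    def to_messages(self) -> list[dict]:
        """Flatten the components into a chat-style list of role/content messages."""
        parts = [self.system_instructions]
        if self.personal_memory:
            parts.append("User memory:\n" + json.dumps(self.personal_memory))
        if self.external_knowledge:
            parts.append("Reference material:\n" + "\n".join(f"- {s}" for s in self.external_knowledge))
        if self.tools:
            parts.append("Available tools:\n" + json.dumps(self.tools))
        if self.output_schema:
            parts.append("Respond with JSON matching this schema:\n" + json.dumps(self.output_schema))
        messages = [{"role": "system", "content": "\n\n".join(parts)}]
        messages.extend(self.history)  # earlier turns, oldest first
        messages.append({"role": "user", "content": self.user_task})
        return messages


ctx = Context(
    system_instructions="You are a concise scheduling assistant.",
    user_task="Reschedule my 2 PM with Sarah.",
    personal_memory={"timezone": "Europe/Berlin"},
    output_schema={"action": "string", "new_time": "string"},
)
messages = ctx.to_messages()
print(messages[0]["content"])
```

Keeping the components as separate fields, rather than one hand-written prompt string, makes it easy to swap, omit, or trim any single piece without touching the others.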

Why It Matters: From Chatbots to Co-Pilots

Let’s say a user types: “Reschedule my 2 PM with Sarah.”

If the model has no calendar access, no prior context, and no tools, it can only guess. With context engineering, the model:

- Accesses your schedule.

- Knows who Sarah is.

- Understands your meeting priorities.

- Has tool access to reschedule the meeting and notify attendees.

The result? A smart assistant, not just a chat model.
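The Sarah scenario can be sketched as a pair of tools plus a dispatcher. Everything here is hypothetical: the in-memory `CALENDAR` and `CONTACTS`, the `find_meeting` and `reschedule_meeting` tools, and the `{"name": ..., "arguments": ...}` shape of a tool call (real providers each define their own tool-calling format). The model's replies are simulated so the example runs on its own.

```python
from __future__ import annotations

import json
from datetime import datetime

# Hypothetical data the assistant has been given access to.
CALENDAR = {
    "evt-1": {"title": "Sync with Sarah", "attendee": "sarah@example.com",
              "start": "2024-06-03T14:00"},
}
CONTACTS = {"Sarah": "sarah@example.com"}
NOTIFICATIONS: list[str] = []  # stands in for email/chat notifications


def find_meeting(attendee_name: str, start: str) -> str | None:
    """Tool: return the id of the meeting with this person at this start time."""
    email = CONTACTS.get(attendee_name)
    for event_id, event in CALENDAR.items():
        if event["attendee"] == email and event["start"] == start:
            return event_id
    return None


def reschedule_meeting(event_id: str, new_start: str) -> str:
    """Tool: move an event and notify the attendee."""
    datetime.fromisoformat(new_start)  # reject malformed times with ValueError
    event = CALENDAR[event_id]
    event["start"] = new_start
    NOTIFICATIONS.append(f"To {event['attendee']}: moved to {new_start}")
    return f"Rescheduled {event['title']} to {new_start}"


TOOLS = {"find_meeting": find_meeting, "reschedule_meeting": reschedule_meeting}


def dispatch(tool_call_json: str):
    """Run a tool call emitted as {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    if call["name"] not in TOOLS:
        raise ValueError(f"Unknown tool: {call['name']}")
    return TOOLS[call["name"]](**call["arguments"])


# Simulated model outputs; a real LLM would produce these from the context.
event_id = dispatch(json.dumps({"name": "find_meeting",
                                "arguments": {"attendee_name": "Sarah", "start": "2024-06-03T14:00"}}))
result = dispatch(json.dumps({"name": "reschedule_meeting",
                              "arguments": {"event_id": event_id, "new_start": "2024-06-03T16:00"}}))
print(result)  # Rescheduled Sync with Sarah to 2024-06-03T16:00
```

In a real system, the tool descriptions would be part of the context and the model would decide which call to emit; the dispatcher only executes what it asked for, and rejects unknown tool names.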

From Prompt to Context System: A Real Workflow

To build systems that think and act, we need to treat context as a pipeline:

- Ingestion: Pull relevant data (documents, APIs, user settings).

- Processing: Clean, summarize, and format the data for token efficiency.

- Context Assembly: Combine modular blocks: system prompt, tools, retrieved info, and output schema.

- Execution: Pass the assembled context to the LLM, interpret its output, and trigger actions via APIs when needed.

Each stage should be modular (replaceable on its own), traceable (you can inspect exactly what reached the model), and optimized for the token budget.
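The four stages can be sketched as small, separately testable functions. This is a minimal illustration under stated assumptions: `fake_llm` stands in for a real model call, truncation stands in for real summarization, and all function names are hypothetical. The `trace` list shows one simple way to keep every call traceable.

```python
from __future__ import annotations

from typing import Callable


def ingest(sources: dict[str, Callable[[], str]]) -> dict[str, str]:
    """Ingestion: pull raw text from each named source (documents, APIs, settings)."""
    return {name: fetch() for name, fetch in sources.items()}


def process(raw: dict[str, str], max_chars: int = 200) -> dict[str, str]:
    """Processing: normalize whitespace and truncate each source to save tokens."""
    cleaned = {}
    for name, text in raw.items():
        text = " ".join(text.split())
        cleaned[name] = text if len(text) <= max_chars else text[:max_chars] + "..."
    return cleaned


def assemble(system_prompt: str, blocks: dict[str, str], question: str) -> str:
    """Context assembly: combine labeled, modular blocks into one prompt."""
    sections = [f"## System\n{system_prompt}"]
    sections += [f"## {name}\n{text}" for name, text in blocks.items()]
    sections.append(f"## Question\n{question}")
    return "\n\n".join(sections)


def execute(prompt: str, llm: Callable[[str], str], trace: list[str]) -> str:
    """Execution: record the exact prompt for traceability, then call the model."""
    trace.append(prompt)
    return llm(prompt)


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; it only reports the prompt size.
    return f"(answer based on {len(prompt)} characters of context)"


trace: list[str] = []
raw = ingest({
    "user_settings": lambda: "timezone:   Europe/Berlin\nworking hours: 9-17",
    "docs": lambda: "Meetings may be moved up to 24h in advance. " * 20,
})
blocks = process(raw, max_chars=120)
prompt = assemble("You are a scheduling assistant.", blocks, "Can I move my 2 PM?")
answer = execute(prompt, fake_llm, trace)
print(answer)
```

Because each stage only consumes the previous stage's output, any one of them (for example, swapping truncation for an LLM summarizer) can be replaced without touching the rest.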

Principles of Good Context Engineering

✅ Use structured context blocks—don’t overwhelm the LLM with raw logs.

✅ Compress history and memory where possible—summarize rather than repeat.

✅ Format instructions clearly; keep a consistent YAML- or JSON-style layout across context blocks.

✅ Limit noise; irrelevant information tends to degrade output quality.

✅ Dynamically inject only what’s needed at runtime.
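Two of these principles, compressing history and injecting only what's needed, can be sketched in a few lines. This assumes a crude 4-characters-per-token estimate and word-overlap relevance scoring; production systems would more likely use a real tokenizer, embedding-based retrieval, and an LLM-generated summary. All function names are hypothetical.

```python
from __future__ import annotations

import re


def estimate_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token); use a real tokenizer in practice."""
    return max(1, len(text) // 4)


def compress_history(turns: list[str], keep_last: int = 2) -> list[str]:
    """Keep recent turns verbatim and collapse older ones into a single summary line.

    The 'summary' is just the first four words of each old turn; a real system
    might ask an LLM to summarize instead.
    """
    if len(turns) <= keep_last:
        return list(turns)
    old, recent = turns[:-keep_last], turns[-keep_last:]
    gist = "; ".join(" ".join(t.split()[:4]) for t in old)
    return [f"[Earlier conversation, summarized: {gist}]"] + recent


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words, used for naive relevance scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_relevant(query: str, snippets: list[str], budget_tokens: int) -> list[str]:
    """Inject only snippets sharing words with the query, best first, within budget."""
    q = words(query)
    scored = [(len(q & words(s)), s) for s in snippets]
    chosen, used = [], 0
    for score, snippet in sorted(scored, key=lambda pair: pair[0], reverse=True):
        cost = estimate_tokens(snippet)
        if score == 0 or used + cost > budget_tokens:
            continue  # irrelevant, or would blow the budget
        chosen.append(snippet)
        used += cost
    return chosen


history = [
    "User asked about the Q3 roadmap for the mobile app",
    "Assistant listed three milestones",
    "User asked to move the 2 PM meeting",
    "Assistant asked which day",
]
compressed = compress_history(history)

snippets = [
    "Sarah prefers afternoon meetings.",
    "The office cafeteria closes at three.",
    "Meeting with Sarah is at 2 PM on Monday.",
]
picked = select_relevant("Reschedule my 2 PM meeting with Sarah", snippets, budget_tokens=50)
print(compressed)
print(picked)
```

The cafeteria snippet shares no words with the query, so it never reaches the model; with a tighter budget, lower-scoring snippets are dropped first.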

Tools and Ecosystem

Several open-source tools and frameworks support context engineering:

- LangChain – For chaining tools, memory, and retrieval.

- Haystack – Great for retrieval-augmented generation (RAG).

- AutoGen – Multi-agent orchestration with dynamic tool routing.

- GitHub: Context-Engineering – Schemas, examples, and best practices.

These help orchestrate inputs, shape outputs, and manage token budgets.

Common Challenges

- Token Limits: Even GPT-4 has a finite context window, so you can’t feed it a novel on every call.

- Non-Determinism: Even well-engineered context can yield inconsistent or vague responses, because model outputs are sampled rather than fixed.

- Overengineering: Complexity for the sake of it doesn’t help.
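One common way to contain non-determinism is to validate the model's output against the expected schema and retry with a correction hint when it fails. The sketch below assumes the model was asked for JSON with hypothetical `action` and `new_time` fields; `flaky_llm` is a stub that returns prose first and valid JSON second.

```python
from __future__ import annotations

import json
from typing import Callable

# Hypothetical output schema: field name -> required Python type.
REQUIRED_FIELDS = {"action": str, "new_time": str}


def parse_and_validate(raw: str) -> dict | None:
    """Return the parsed reply if it is a JSON object with the required fields, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    for key, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(key), expected_type):
            return None
    return data


def call_with_retries(llm: Callable[[str], str], prompt: str, max_attempts: int = 3) -> dict:
    """Re-ask the model until its reply validates, appending a correction hint."""
    current = prompt
    for _ in range(max_attempts):
        parsed = parse_and_validate(llm(current))
        if parsed is not None:
            return parsed
        current = (prompt + "\n\nYour last reply was not valid JSON with the fields "
                   + ", ".join(REQUIRED_FIELDS) + ". Reply with JSON only.")
    raise ValueError(f"No valid output after {max_attempts} attempts")


# Stub model: the first reply is chatty prose, the second is valid JSON.
replies = iter(["Sure! I moved it to 4 PM.", '{"action": "reschedule", "new_time": "16:00"}'])
calls: list[str] = []


def flaky_llm(prompt: str) -> str:
    calls.append(prompt)
    return next(replies)


output = call_with_retries(flaky_llm, "Reschedule my 2 PM with Sarah.")
print(output)  # {'action': 'reschedule', 'new_time': '16:00'}
```

Capping the number of attempts keeps a misbehaving model from looping forever; the caller decides what to do when validation keeps failing.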

Final Thoughts: A New Era of Intelligent Interfaces

Context Engineering is what turns LLMs from toys into tools. It’s where design, product, and engineering intersect to build powerful, personalized AI experiences.

If you’re building AI systems that need to scale, adapt, and respond like real assistants—start thinking less about prompts, and more about the systems that deliver the right context at the right time.

Because at the end of the day, an LLM is only as smart as what you feed it—and how.